Nonuniqueness

An inversion attempts to solve a linear system of equations in which there are fewer equations than unknowns. In the app, the default number of model parameters was 100 while the number of data was 20. The system of equations is therefore underdetermined, and infinitely many models can fit the data.
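The nonuniqueness can be checked numerically. The minimal sketch below assumes a random 20-by-100 matrix `G` as a stand-in for the app's forward operator (the app's actual operator is not reproduced here). Any vector in the null space of `G` can be added to a model without changing the predicted data, so two clearly different models fit the same data exactly.

```python
import numpy as np

# Sizes mirror the app defaults quoted above: 100 model parameters, 20 data.
n_params, n_data = 100, 20

rng = np.random.default_rng(0)
G = rng.standard_normal((n_data, n_params))  # hypothetical forward operator
m_true = rng.standard_normal(n_params)       # hypothetical "true" model
d = G @ m_true                               # noise-free synthetic data

# Null-space basis of G from the SVD: rows of Vt beyond the numerical rank.
_, s, Vt = np.linalg.svd(G)
rank = int(np.sum(s > 1e-10 * s[0]))
null_basis = Vt[rank:]  # shape (n_params - rank, n_params)

# Adding any null-space combination leaves the predicted data unchanged.
m_other = m_true + 5.0 * null_basis[0] - 3.0 * null_basis[1]

print("null-space dimension:", null_basis.shape[0])
print("m_true fits the data:", np.allclose(G @ m_true, d))
print("m_other fits the data:", np.allclose(G @ m_other, d))
print("distance between the two models:", np.linalg.norm(m_other - m_true))
```

With 20 independent equations in 100 unknowns, the null space has 80 dimensions, so there is an 80-dimensional family of models that reproduce the data equally well.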

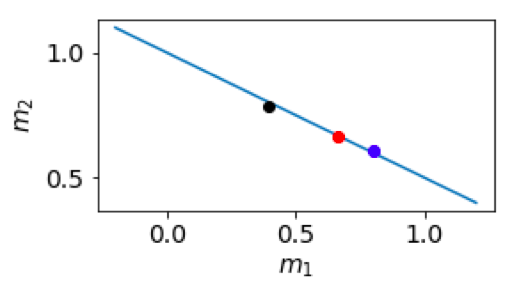

This nonuniqueness, and insight into how to deal with it, is illustrated by a toy example consisting of two model parameters, $m_1$ and $m_2$, and a single datum that constrains a linear combination of them. What is the solution to this problem? In fact, any point along the straight line in Figure 1 below is a valid solution, so quite different pairs $(m_1, m_2)$ fit the datum equally well.

Figure 1: Nonuniqueness
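To make the toy example concrete, the sketch below assumes a hypothetical datum equation $m_1 + 2m_2 = 2$; the coefficients are chosen purely for illustration and may not match the line plotted in Figure 1. The solution set is written as one particular solution plus any multiple of a direction orthogonal to the coefficient vector, and every such point predicts the same datum.

```python
import numpy as np

# Hypothetical single datum: a[0]*m1 + a[1]*m2 = d (illustrative values).
a = np.array([1.0, 2.0])
d = 2.0

# One particular solution: set m2 = 0 and solve for m1.
m_particular = np.array([d / a[0], 0.0])

# Direction along the constraint line: orthogonal to a, so a @ direction == 0
# and moving along it does not change the predicted datum.
direction = np.array([a[1], -a[0]])

for t in (-1.0, 0.0, 0.5, 2.0):
    m = m_particular + t * direction
    print(f"t = {t:+.1f}: m = {m}, predicted datum = {a @ m:.3f}")
```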

If our goal is to find a single “best” answer to our inverse problem, then we clearly need to incorporate additional information as a constraint. A metaphor that is sometimes helpful is the problem of selecting a specific person in a school classroom, as portrayed in Figure 2 below. To select a single individual via an optimization framework, we need to define a ruler by which to measure each candidate. A ruler, when applied to any member of the set, generates a single number, and this allows us to find the biggest or smallest member as evaluated with that ruler. There is no limit to the number of potential rulers; they could measure height, age, length of fingers, amount of hair, number of wrinkles, etc. In general, choosing a different ruler yields a different solution, although it is possible that the youngest person is also the shortest.

Figure 2: Selecting a specific person in a school classroom.
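In code, a ruler is simply a function that maps each member of a set to a single number, and selecting the “best” member means taking the minimum (or maximum) under that function. The sketch below uses a made-up three-person classroom (names and values are hypothetical) to show that two rulers can pick different people.

```python
# Hypothetical classroom records: (name, height_cm, age_years).
students = [
    ("Ana", 150, 12),
    ("Ben", 142, 13),
    ("Cai", 160, 11),
]

# Each "ruler" maps a member of the set to a single number.
rulers = {
    "height": lambda s: s[1],
    "age": lambda s: s[2],
}

for ruler_name, ruler in rulers.items():
    smallest = min(students, key=ruler)
    print(f"smallest by {ruler_name}: {smallest[0]}")
```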

The analogy with vectors is straightforward. The ruler for measuring the length of a vector is a norm. For instance, in the toy example above, the vector of smallest Euclidean length that lies on the constraint line is shown by the red dot in Figure 1. Of all the points on the line, it is the one closest to the origin, that is, the one for which the Euclidean length $\sqrt{m_1^2 + m_2^2}$ of the vector $(m_1, m_2)$ is smallest.
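For a single linear datum $\mathbf{a}^\top\mathbf{m} = d$, the point on the line closest to the origin lies along $\mathbf{a}$, which gives $\mathbf{m}^\ast = d\,\mathbf{a}/(\mathbf{a}^\top\mathbf{a})$. The sketch below assumes the hypothetical datum $m_1 + 2m_2 = 2$ (illustrative coefficients that may not match Figure 1), cross-checks the closed form against NumPy's pseudo-inverse `np.linalg.pinv`, and confirms that moving along the line only increases the length.

```python
import numpy as np

# Hypothetical single datum a @ m = d (illustrative coefficients).
a = np.array([1.0, 2.0])
d = 2.0

# Closed form for the minimum Euclidean-length point on the line.
m_star = d * a / (a @ a)

# The pseudo-inverse of the 1x2 system gives the same minimum-norm solution.
m_pinv = np.linalg.pinv(a.reshape(1, 2)) @ np.array([d])
print("m* =", m_star, "length =", np.linalg.norm(m_star))
print("pinv agrees:", np.allclose(m_star, m_pinv))

# Any other point on the line is farther from the origin.
direction = np.array([a[1], -a[0]])  # orthogonal to a
for t in (-1.0, 0.5, 2.0):
    m = m_star + t * direction
    print(f"t = {t:+.1f}: datum = {a @ m:.3f}, length = {np.linalg.norm(m):.3f}")
```

Because $\mathbf{m}^\ast$ is parallel to $\mathbf{a}$ and the line direction is orthogonal to it, the squared length of any point on the line is $\|\mathbf{m}^\ast\|^2$ plus a non-negative term, so $\mathbf{m}^\ast$ is the unique minimizer.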

A methodology for obtaining a solution to the inverse problem is now clear. We define a “ruler” that measures the size of each element in our solution space, and we choose the element that has the smallest size while still adequately fitting the data. The name for our “ruler” varies, but any of the following descriptors are valid: model norm, model objective function, regularization function, or regularizer. We’ll use the term “model norm” since we are explicitly concerned with ranking the elements by their “size”.
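The methodology can be sketched for a system with the app's default sizes (100 parameters, 20 data). The operator `G` and data `d` below are random stand-ins, not the app's actual problem, and the Euclidean norm is only one possible model norm. For simplicity the sketch demands an exact fit; with noisy data one would instead accept models whose misfit is adequately small. Among exact fits, the smallest-norm model has the closed form $\mathbf{m} = G^\top (G G^\top)^{-1}\mathbf{d}$ when $G$ has full row rank.

```python
import numpy as np

rng = np.random.default_rng(1)
n_data, n_params = 20, 100                     # sizes mirror the app defaults
G = rng.standard_normal((n_data, n_params))    # hypothetical forward operator
d = rng.standard_normal(n_data)                # hypothetical observed data

def model_norm(m):
    """The "ruler": Euclidean length of the model vector."""
    return np.linalg.norm(m)

# Smallest-norm model that fits the data exactly (G has full row rank here).
m_min = G.T @ np.linalg.solve(G @ G.T, d)

# Another exact fit: add a null-space component of G (last right singular vector).
_, _, Vt = np.linalg.svd(G)
m_alt = m_min + 2.0 * Vt[-1]

for label, m in (("minimum norm", m_min), ("alternative", m_alt)):
    misfit = np.linalg.norm(G @ m - d)
    print(f"{label:>12}: misfit = {misfit:.2e}, model norm = {model_norm(m):.3f}")
```

Both models fit the data, but the ruler ranks `m_min` as smaller: it lies in the row space of `G`, so any null-space addition can only increase its length.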